UC 3.2 Visual Product Inspection on Electronics

Prodrive and MEK Europe are working together on this evolving use case. By combining their strengths, they aim to improve the project’s functionality and explore new opportunities, reflecting a shared commitment to progress and innovation.

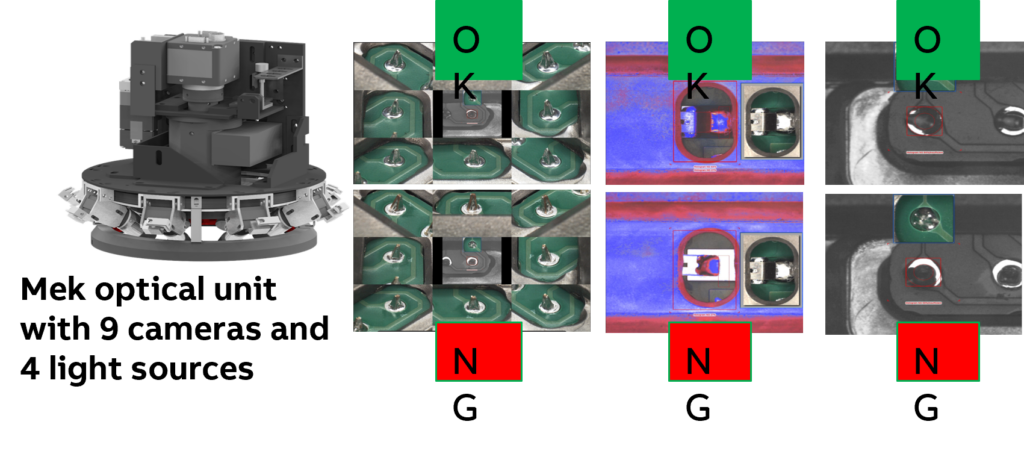

To guarantee high-quality products, electronics manufacturing includes many inspection steps, for example after PCB assembly (using machines from MEK) or after final assembly of the product. The inspection types include checks for presence, alignment, or damage.

Some of these inspections are performed manually or by algorithms that do not use deep learning. This lets non-conforming products slip through, causes high false positive rates, and reduces the traceability of defects.

Impact: Automating these inspections with state-of-the-art explainable deep learning algorithms is vital to support a scalable and sustainable electronics industry. It will allow quicker introduction of new products, higher-quality inspections, and shorter design cycles to improve existing production processes. Explainable results speed up acceptance and adoption because they build trust in the models.

Current Status: Some initial deep learning models (without explainability) are running in production on images of final product assemblies. Explainability will be added using the newly developed library, and models with inherently explainable architectures will be trained on images of product markings and of components on PCBs.

User Interface

Operators currently use several interfaces:

- An interface for manual inspection with work instructions (see image).

- An interface for (semi-)automated inspection with cameras.

- The manufacturing execution system (MES) for product traceability.

New feedback interfaces with explainability are required, and they must integrate into the operators' current workflow. Data from the existing interfaces and systems could be reused as additional input data and as labels.
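
As an illustration of reusing existing data as labels, the sketch below turns an MES export into weak image labels. It is a minimal sketch under assumptions that are not from this use case: the export is a CSV with hypothetical columns `serial` and `result`, and image files follow a hypothetical `<serial>_<view>.png` naming scheme.

```python
import csv
import io
from typing import Dict, List, Tuple


def labels_from_mes(mes_csv: str) -> Dict[str, int]:
    """Map serial number -> weak label (1 = non-conforming, 0 = conforming).

    Assumes a CSV export with hypothetical columns 'serial' and 'result';
    rows whose result is neither PASS nor FAIL (e.g. rework) are skipped.
    """
    labels: Dict[str, int] = {}
    for row in csv.DictReader(io.StringIO(mes_csv)):
        result = row["result"].strip().upper()
        if result in ("PASS", "FAIL"):
            labels[row["serial"].strip()] = 1 if result == "FAIL" else 0
    return labels


def pair_images(image_names: List[str], labels: Dict[str, int]) -> List[Tuple[str, int]]:
    """Pair images named '<serial>_<view>.png' (hypothetical naming) with MES labels.

    Images without a matching serial number are skipped (left unlabeled).
    """
    pairs = []
    for name in image_names:
        serial = name.split("_", 1)[0]
        if serial in labels:
            pairs.append((name, labels[serial]))
    return pairs


if __name__ == "__main__":
    export = "serial,result\nSN001,PASS\nSN002,FAIL\nSN003,REWORK\n"
    labels = labels_from_mes(export)
    print(labels)  # {'SN001': 0, 'SN002': 1}
    print(pair_images(["SN001_top.png", "SN002_top.png", "SN004_side.png"], labels))
```

These labels are weak: a unit-level FAIL in the MES does not say which image or region shows the defect, so they are better suited as extra training signal or for prioritizing review than as ground truth.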

ML Model Summary

Two types of image-based models are used:

- Binary classification models with an EfficientNet [1] backbone: fully black box with no explainability; used in production for final inspections.

- Anomaly detection models (see anomalib [2]) that output an anomaly heatmap; still in an experimental phase.
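
To show how a heatmap output can drive an inspection decision, the sketch below derives a pass/fail verdict and a defect region from a per-pixel anomaly map. It does not use the anomalib API; taking the maximum pixel score as the image-level score is an assumed (though common) convention, and both thresholds are hypothetical parameters.

```python
from typing import List, Optional, Tuple

Heatmap = List[List[float]]  # rows of per-pixel anomaly scores
BBox = Tuple[int, int, int, int]  # (row_min, col_min, row_max, col_max)


def image_score(heatmap: Heatmap) -> float:
    """Image-level anomaly score taken as the maximum pixel score (assumed convention)."""
    return max(max(row) for row in heatmap)


def anomalous_bbox(heatmap: Heatmap, pixel_threshold: float) -> Optional[BBox]:
    """Bounding box around all pixels whose score reaches pixel_threshold, or None."""
    hits = [
        (r, c)
        for r, row in enumerate(heatmap)
        for c, value in enumerate(row)
        if value >= pixel_threshold
    ]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)


def inspect(heatmap: Heatmap, image_threshold: float, pixel_threshold: float):
    """Return (verdict, score, region); a region is only reported for failed images."""
    score = image_score(heatmap)
    verdict = "fail" if score >= image_threshold else "pass"
    region = anomalous_bbox(heatmap, pixel_threshold) if verdict == "fail" else None
    return verdict, score, region


if __name__ == "__main__":
    heatmap = [
        [0.05, 0.10, 0.08, 0.02],
        [0.07, 0.85, 0.90, 0.04],
        [0.03, 0.60, 0.20, 0.01],
    ]
    print(inspect(heatmap, image_threshold=0.5, pixel_threshold=0.5))
    # ('fail', 0.9, (1, 1, 2, 2))
```

A single box merges all anomalous pixels; separating multiple defects would need connected-component labeling, which is left out to keep the sketch short.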

Required performance is 100% recall (no defective product may slip through) and more than 99.5% precision.
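
One way to check this requirement on a validation set is to fix the decision threshold so that every defective sample is flagged, then measure the precision that remains. The sketch below assumes the model outputs a defect score per image (higher means more likely defective) with defective as the positive class; the validation data is made up.

```python
from typing import Sequence, Tuple


def threshold_for_full_recall(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Highest threshold that still flags every defective sample (label 1).

    A sample is flagged as defective when score >= threshold.
    """
    defect_scores = [s for s, y in zip(scores, labels) if y == 1]
    if not defect_scores:
        raise ValueError("validation set needs at least one defective sample")
    return min(defect_scores)


def recall_precision(scores, labels, threshold) -> Tuple[float, float]:
    """Recall and precision for the defective class at the given threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return recall, precision


def meets_requirement(scores, labels, min_precision: float = 0.995):
    """Fix recall at 100% on the validation set, then check precision > min_precision."""
    threshold = threshold_for_full_recall(scores, labels)
    recall, precision = recall_precision(scores, labels, threshold)
    return threshold, recall, precision, recall == 1.0 and precision > min_precision


if __name__ == "__main__":
    # Hypothetical validation scores: 200 good units with low scores,
    # one good unit scoring 0.75 and three defective units.
    scores = [i / 1000 for i in range(200)] + [0.75, 0.70, 0.80, 0.95]
    labels = [0] * 200 + [0, 1, 1, 1]
    print(meets_requirement(scores, labels))
    # (0.7, 1.0, 0.75, False)
```

With few defective samples, a single false positive above the threshold pushes precision far below 99.5%. Also, 100% recall on a finite validation set does not guarantee zero slip in production, so a safety margin below the lowest defect score may be needed.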

Explainer Component Overview

Various explainer techniques have been evaluated for the binary classification models. An evaluation with operators covered reference images, LayerCAM, and Integrated Gradients (IG) explainers, with the following outcomes:

- The combination of reference images and LayerCAM was the most useful.

- Explanatory text on how to interpret the explainers is preferred.

- Inspection grades (the required quality level) should be added.

- Textual explanations (LVLM/GPT) should be investigated.
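
For reference, LayerCAM weights each spatial activation of a convolutional layer by the positive part of its gradient with respect to the class score, sums over channels, and applies a ReLU. The sketch below computes that map from activations and gradients that are assumed to be already extracted from the chosen layer (for example with framework hooks, not shown); upsampling to image resolution and the side-by-side display with a reference image are also omitted. The numbers are made up.

```python
from typing import List

Tensor3 = List[List[List[float]]]  # [channel][row][col]
Map2 = List[List[float]]  # [row][col]


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def layercam(activations: Tensor3, gradients: Tensor3) -> Map2:
    """LayerCAM map: ReLU(sum over channels of ReLU(gradient) * activation), per location."""
    height, width = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for act_k, grad_k in zip(activations, gradients):
        for i in range(height):
            for j in range(width):
                cam[i][j] += relu(grad_k[i][j]) * act_k[i][j]
    return [[relu(v) for v in row] for row in cam]


def normalize(cam: Map2) -> Map2:
    """Min-max scale to [0, 1] for display as an overlay; a flat map becomes all zeros."""
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    if hi == lo:
        return [[0.0] * len(row) for row in cam]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]


if __name__ == "__main__":
    # Toy 2-channel, 2x2 feature maps and their gradients (made-up numbers).
    activations = [[[1.0, 2.0], [0.0, 1.0]], [[2.0, 0.0], [1.0, 3.0]]]
    gradients = [[[0.5, -1.0], [1.0, 0.0]], [[0.0, 1.0], [-0.5, 0.5]]]
    cam = layercam(activations, gradients)
    print(cam)  # [[0.5, 0.0], [0.0, 1.5]]
    print(normalize(cam))
```

Unlike Grad-CAM, which uses one averaged weight per channel, the element-wise gradient weighting keeps finer spatial detail, which may matter for small PCB components.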

Feedback Component Overview

The explainers have been integrated into the feedback interface (see below), where operators can either pass a region or select the defects present in it. The evaluation led to the following recommendations:

- Focus on failed or uncertain regions first.

- Use labeling data from existing processes.

- Use the labeler's experience level to assess label quality.
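
The first and last recommendations can be sketched as a review queue and a weighted vote. In this minimal sketch the decision threshold, the width of the "uncertain" band, the experience weights, and the label names are all hypothetical; the winning label's share of the total weight is used as a rough label-quality score.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class Region:
    region_id: str
    defect_score: float  # model output in [0, 1]; higher means more likely defective


def review_order(regions: Sequence[Region], threshold: float = 0.5, band: float = 0.1) -> List[Region]:
    """Failed regions first (highest score first), then uncertain ones
    (closest to the threshold first), then confidently passed regions."""
    def priority(r: Region) -> Tuple[int, float]:
        if r.defect_score >= threshold + band:
            return (0, -r.defect_score)
        if abs(r.defect_score - threshold) < band:
            return (1, abs(r.defect_score - threshold))
        return (2, -r.defect_score)
    return sorted(regions, key=priority)


def aggregate_labels(votes: Sequence[Tuple[str, str]], experience: Dict[str, float]) -> Tuple[str, float]:
    """Experience-weighted vote over non-empty (labeler, label) pairs.

    Returns the winning label and its share of the total weight.
    Labelers missing from `experience` get weight 1.0.
    """
    totals: Dict[str, float] = defaultdict(float)
    for labeler, label in votes:
        totals[label] += experience.get(labeler, 1.0)
    best = max(totals, key=totals.get)
    return best, totals[best] / sum(totals.values())


if __name__ == "__main__":
    regions = [Region("A", 0.95), Region("B", 0.55), Region("C", 0.05),
               Region("D", 0.42), Region("E", 0.70)]
    print([r.region_id for r in review_order(regions)])  # ['A', 'E', 'B', 'D', 'C']
    votes = [("senior", "missing_component"), ("junior_a", "pass"), ("junior_b", "pass")]
    weights = {"senior": 3.0, "junior_a": 1.0, "junior_b": 1.0}
    print(aggregate_labels(votes, weights))  # ('missing_component', 0.6)
```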